Published January 23, 2026

LLM Benchmarking for In-Context Retrieval and Reasoning in Incident Root Cause Analysis

When vibe eval falls short

When we first started as a company, we picked LLMs the way most startups do: by vibes. When a new model was released, a few engineers would run it through their own workflows, and if it “felt better,” we deployed it. Most teams building with LLMs still operate this way, especially as evaluation frameworks struggle to keep pace with the rate of model releases.

“Vibe eval” worked when we were lean and iterating rapidly, but as our use cases expanded and our customer base grew, systematic evaluation became critical. A model that felt better in one workflow could quietly introduce regressions in another, and those regressions had tangible consequences: slower incident diagnosis and eroded customer trust. At the same time, the choices grew more complex: reasoning depth vs. speed, context size vs. cost. Matching models to use cases by hand stopped scaling as the number of combinations grew.

So we invested in evaluation properly: a dedicated team building a rigorous, multi-dimensional testing framework.

That decision paid off quickly. As new models like GPT-5, GPT-5.1, Opus-4.5, and Sonnet-4.5 emerged, internal opinions diverged. Half of the team felt one model performed better based on their day-to-day usage, while the other half believed another was the right choice based on standard benchmark numbers. However, everyone agreed that neither the hands-on vibes nor the benchmark-driven vibes reliably reflected performance on root cause analysis for complex incidents, where models must reason over incomplete telemetry, evolving system state, and tight latency constraints.

Our evaluation approach

We approached evaluation as an applied AI research problem, grounded in the realities of operating large-scale production systems.

Our domain spans petabytes of highly sensitive telemetry and code that no company can reasonably publish, which makes public benchmarks infeasible. We did have one key advantage: access to real production incidents from our customer deployments, with the same messy, heterogeneous data, ranging from incomplete logs to inconsistent system states, that we encounter in production. Rather than abstracting these away, we chose to build evaluations directly on this data, despite the added complexity of privacy controls, PII handling, and governance. If our product had to generalize across architectures and operating patterns, it needed to be tested under real conditions. By grounding evaluation in the same infrastructure used to investigate real incidents, the system itself becomes a reference environment for assessing whether models can reason effectively under real-world constraints.

Building on this foundation, we focused the evaluation on a specific capability of LLMs central to our use case: in-context retrieval and reasoning for incident root cause analysis. This stage occurs late in the incident-response pipeline, after a swarm of agents has narrowed the search space from billions of telemetry signals to a focused candidate set on the order of hundreds through multiple rounds of retrieval and reasoning. At this point, the model is provided with an incident description and the Traversal-identified subset of telemetry signals, and must select the relevant evidence and reason correctly to the underlying root cause.

“Traversal has built a highly sophisticated system for evaluating operational reasoning directly within real incident-response workflows. By grounding evaluations in real production data, they’re able to assess how models—including GPT-5.2—perform under real-world constraints,” said Marc Manara, Head of Startups at OpenAI. “This systems-level approach and architectural depth are critical for building differentiated systems and for continuously improving enterprise AI as the pace of model improvements accelerates.”

For each incident, each model generated a diagnosis. Rather than comparing text syntactically, we evaluated whether the model’s reasoning and conclusion matched what senior SREs ultimately determined. Traversal SRE researchers and customer SREs manually validated a randomly sampled subset of judgments, and we then ported the evaluation to an LLM judge so it could run at scale.
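One common way to check an LLM judge against a manually validated sample is to measure how often the two agree, corrected for chance. The sketch below is only an illustration of that idea, not a description of the framework above: the function name `agreement_and_kappa` and the label encoding (1 for "matches the SRE-determined root cause", 0 otherwise) are assumptions.

```python
from collections import Counter


def agreement_and_kappa(human, judge):
    """Return (raw agreement, Cohen's kappa) for two equal-length label lists.

    `human` holds labels from manual validation and `judge` holds the LLM
    judge's labels for the same items. Both names are hypothetical.
    """
    if len(human) != len(judge) or not human:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(human)

    # Fraction of items where the two raters gave the same label.
    observed = sum(h == j for h, j in zip(human, judge)) / n

    # Agreement expected by chance, from each rater's label frequencies.
    human_freq = Counter(human)
    judge_freq = Counter(judge)
    expected = sum(human_freq[c] * judge_freq[c] for c in human_freq) / (n * n)

    if expected == 1.0:
        # Both raters used one identical label throughout; kappa is
        # undefined, so report perfect agreement by convention.
        return observed, 1.0
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa
```

A high raw agreement with a low kappa usually means one label dominates the sample, which is worth knowing before trusting the judge at scale.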

As we iterated on this framework, four factors consistently emerged as the ones that mattered most in production:

Accuracy: Getting the answer right is non-negotiable, but defining ‘right’ in RCA isn’t straightforward.

Speed: Latency compounds in high-stakes incidents. Humans tolerate latency from other humans but less so from software, creating asymmetric latency expectations that are painfully visible during outages.

Token count: Larger context windows let models ingest more telemetry and logs without truncation, but at the price of higher latency and cost.

Comprehensiveness: Beyond accuracy, responses must be actionable: citation-backed and contextualized.
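To make the four factors concrete, here is a minimal sketch of how one model's result on one incident might be recorded and then aggregated. Every field and function name (`IncidentResult`, `cites_evidence`, `summarize`) is a hypothetical choice for illustration, and reducing comprehensiveness to a single boolean is a deliberate simplification.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class IncidentResult:
    """One model's result on one incident (all fields are illustrative)."""
    model: str
    incident_id: str
    correct: bool          # accuracy: judged to match the SRE conclusion
    latency_s: float       # speed: wall-clock seconds to a diagnosis
    total_tokens: int      # token count: prompt plus completion tokens
    cites_evidence: bool   # comprehensiveness, reduced to one flag here


def summarize(results):
    """Aggregate a non-empty list of IncidentResult into per-factor metrics."""
    if not results:
        raise ValueError("need at least one result")
    n = len(results)
    return {
        "accuracy": sum(r.correct for r in results) / n,
        "median_latency_s": median(r.latency_s for r in results),
        "mean_total_tokens": sum(r.total_tokens for r in results) / n,
        "citation_rate": sum(r.cites_evidence for r in results) / n,
    }
```

Median latency is used rather than the mean so that a few slow outliers do not dominate the summary; that is a design preference, not a requirement.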

Results

Our evaluation framework allowed us to treat new model releases as reruns of the same benchmark. It delivers automated, statistically grounded comparisons in minutes rather than the days that vibe-based eval typically takes, and it enables direct comparison across model generations.
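One way to attach statistical confidence to a rerun is a paired bootstrap over incidents: resample the incident set with replacement and look at the spread of the accuracy difference between two models. The sketch below assumes per-incident correctness flags for both models on the same incidents; the function name, the default resample count, and the percentile method are illustrative choices, not a description of the framework's actual statistics.

```python
import random


def paired_bootstrap_diff(correct_a, correct_b, n_resamples=2000,
                          alpha=0.05, seed=0):
    """Return (observed, low, high) for accuracy(A) - accuracy(B).

    correct_a[i] and correct_b[i] are 0/1 (or bool) outcomes for the same
    incident i. low/high bound a percentile bootstrap interval.
    """
    if len(correct_a) != len(correct_b) or not correct_a:
        raise ValueError("inputs must be non-empty and the same length")
    n = len(correct_a)
    rng = random.Random(seed)  # fixed seed keeps reruns reproducible

    diffs = []
    for _ in range(n_resamples):
        # Resample incidents, keeping each incident's pair together.
        idx = [rng.randrange(n) for _ in range(n)]
        diffs.append(sum(correct_a[i] - correct_b[i] for i in idx) / n)
    diffs.sort()

    lo_i = max(0, int((alpha / 2) * n_resamples))
    hi_i = min(n_resamples - 1, int((1 - alpha / 2) * n_resamples) - 1)
    observed = (sum(correct_a) - sum(correct_b)) / n
    return observed, diffs[lo_i], diffs[hi_i]
```

If the interval excludes zero, the difference is unlikely to be resampling noise; if it straddles zero, the two models are not clearly separated on this incident set.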

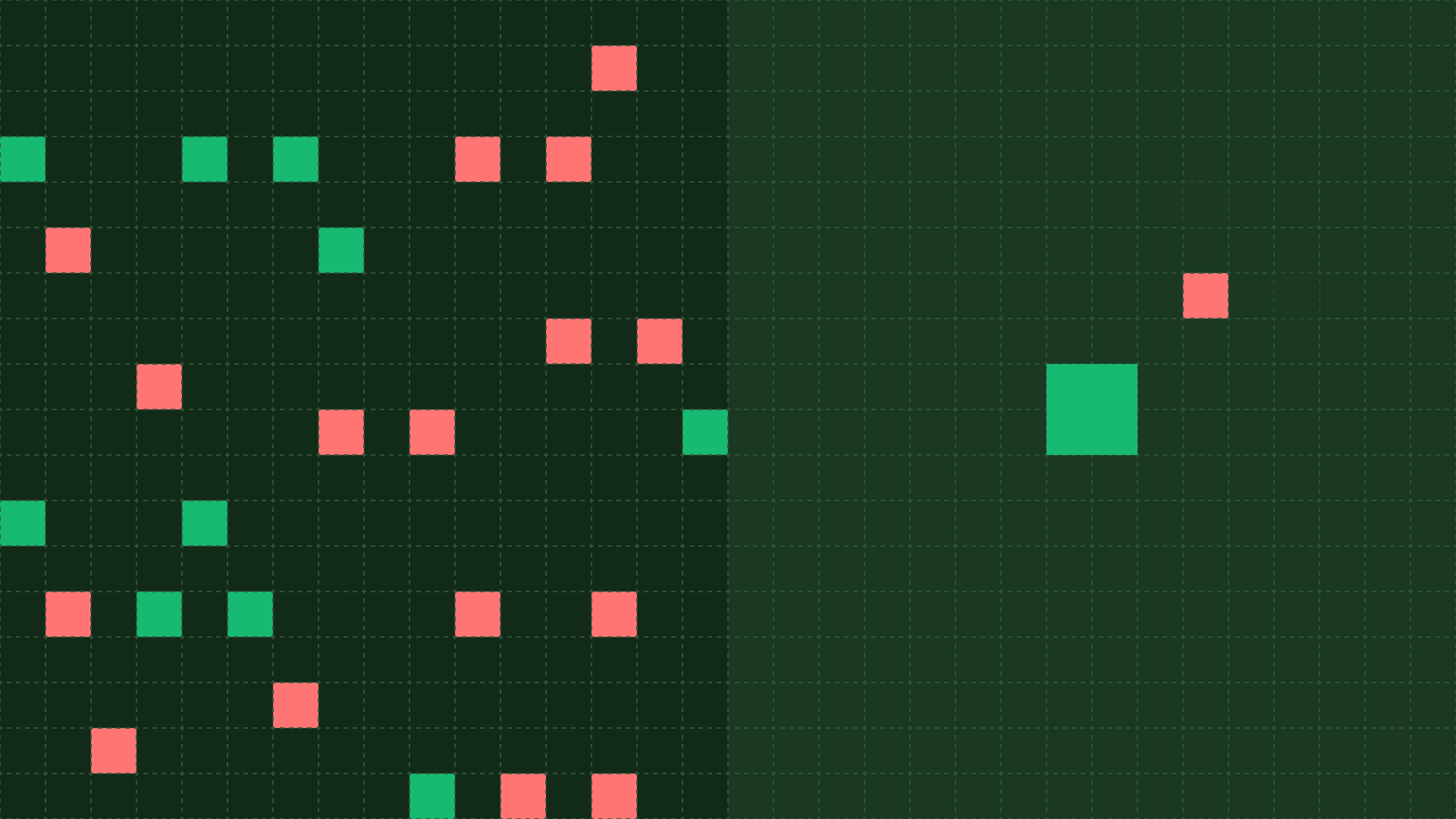

When GPT-5.2 was introduced, we evaluated it against the other frontier models available at the time, including GPT-5.1, Opus-4.5, and Sonnet-4.5. (Note: Gemini was excluded from this round because rate-limiting issues prevented it from handling our request volume.)

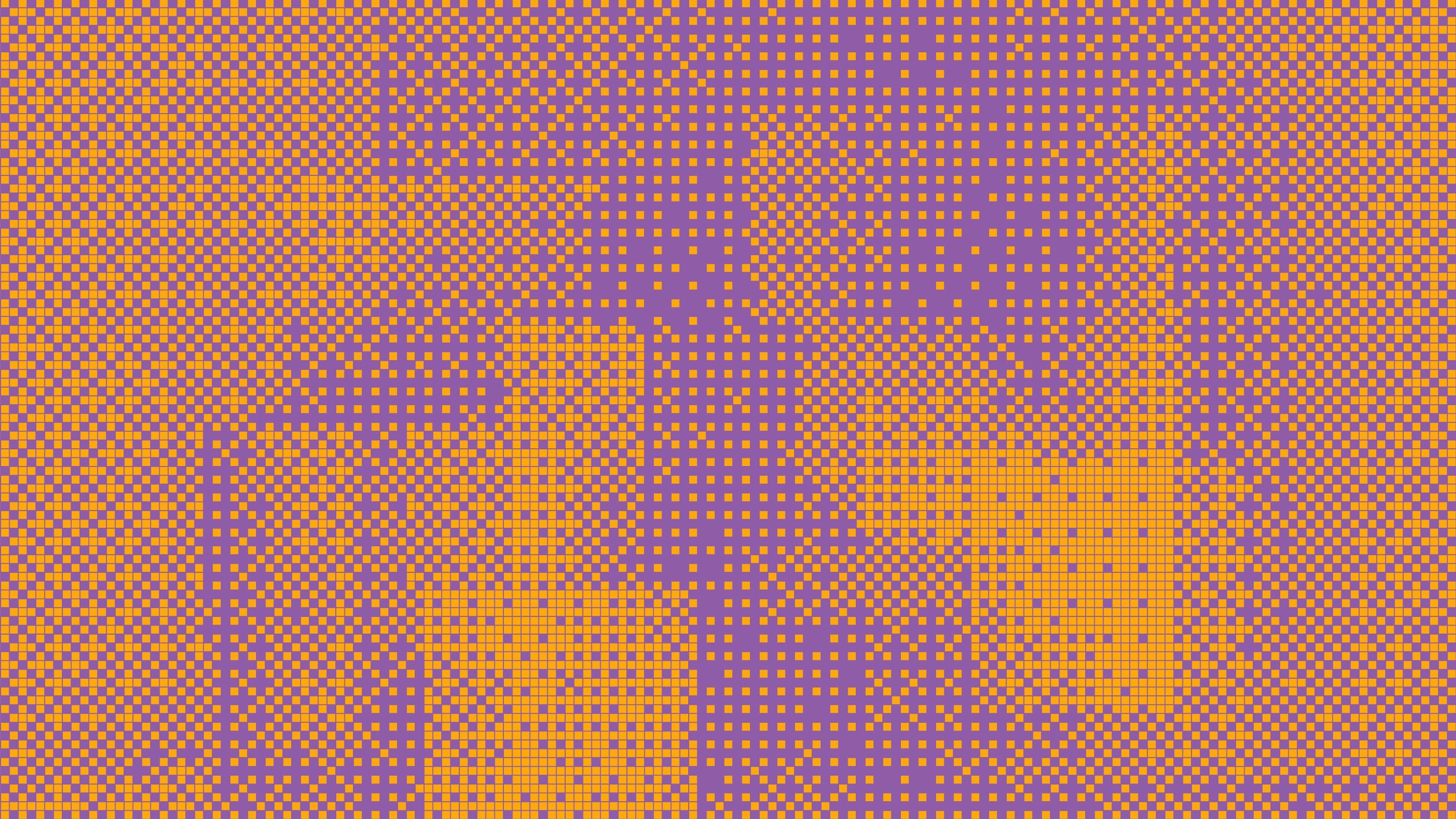

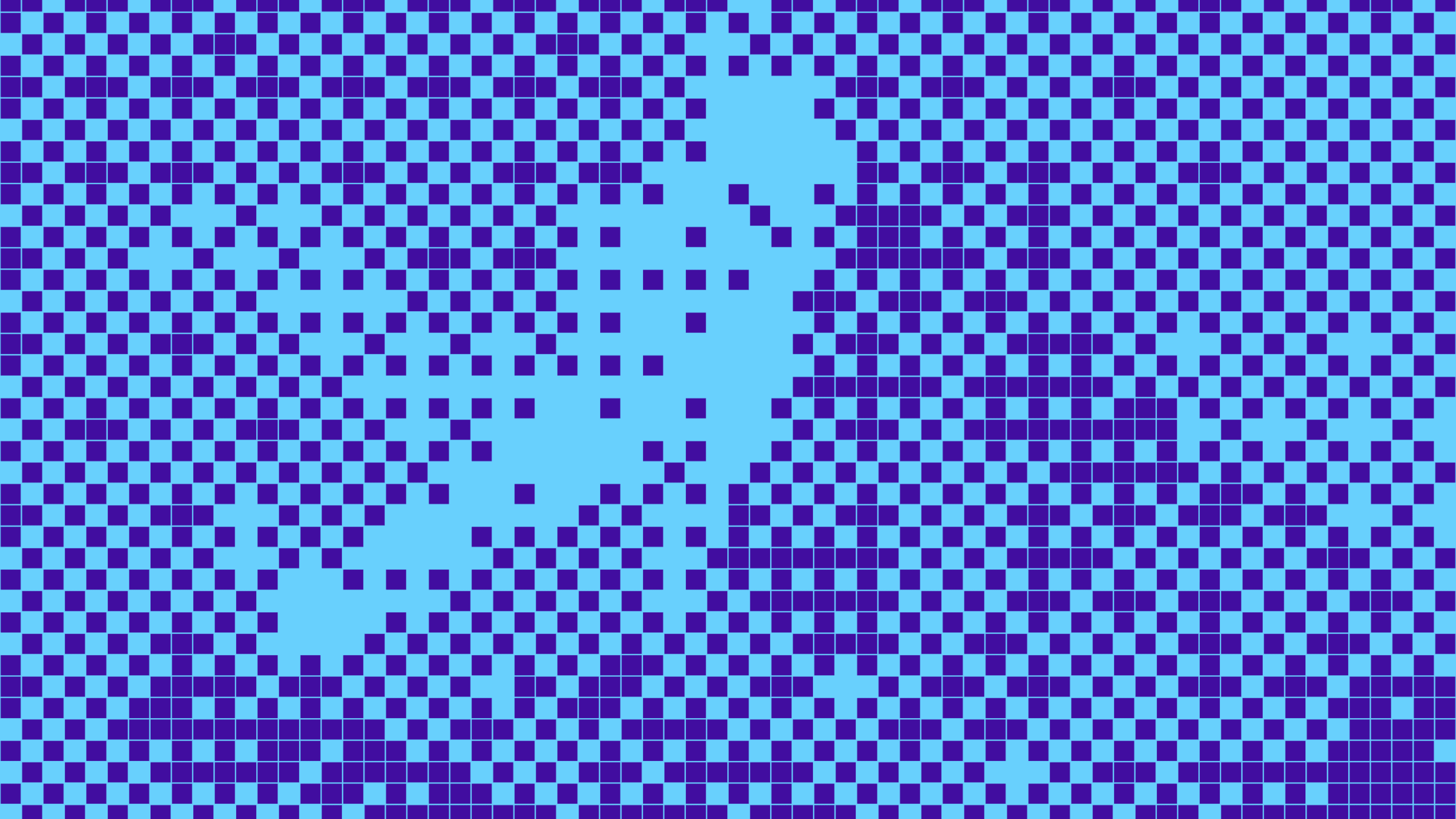

Across real production incidents for this particular use case, GPT-5.2-default emerged as the overall winner, combining higher diagnostic accuracy with practical latency. While GPT-5.1 remained very fast, GPT-5.2 consistently produced better root cause analyses.

Looking within the GPT-5.2 family, we saw a consistent pattern rather than a single dominant configuration. Different reasoning variants performed well along different dimensions, forming a clear accuracy-latency tradeoff curve. Medium-reasoning configurations narrowly led on aggregate score, while lower-reasoning variants responded faster and won more individual incidents. Higher-reasoning variants offered deeper analysis but at latencies that were less practical for live incident response.
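An accuracy-latency tradeoff curve like this can be read as a Pareto frontier: the configurations that no other configuration beats on both accuracy and latency at once. The sketch below shows that computation on placeholder inputs. The tuple layout and the function name are assumptions, and no numbers from our evaluation are reproduced here.

```python
def pareto_frontier(configs):
    """Return names of configurations not dominated by any other.

    configs: list of (name, accuracy, latency_s) tuples. One configuration
    dominates another if it is at least as accurate and at least as fast,
    and strictly better on at least one of the two.
    """
    frontier = []
    for name, acc, lat in configs:
        dominated = any(
            (o_acc >= acc and o_lat <= lat) and (o_acc > acc or o_lat < lat)
            for _, o_acc, o_lat in configs
        )
        if not dominated:
            frontier.append(name)
    return frontier


# Placeholder values for illustration only, not measured results.
example = [
    ("config-a", 0.8, 10.0),
    ("config-b", 0.8, 20.0),  # same accuracy as config-a but slower
    ("config-c", 0.9, 30.0),
    ("config-d", 0.7, 5.0),
]
frontier_names = pareto_frontier(example)
```

Anything off the frontier can be dropped from consideration; choosing among the frontier points is then a product decision about how much latency a given gain in accuracy is worth.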

This tradeoff was especially clear in a head-to-head comparison between GPT-5.2-none and GPT-5.2-med. Both variants produced similarly accurate diagnoses that narrowed the blast radius enough to page the correct team. The difference was speed: GPT-5.2-none responded nearly twice as fast on average. In practice, that latency gap directly translated to faster MTTR and reduced customer impact.

Across model generations, the same pattern held. Increasing reasoning depth did not meaningfully improve accuracy in this evaluation, but it consistently increased response time. The models evolved, yet the operational conclusion remained unchanged.

What’s next

Whether you’re building AI for production systems or any other LLM-powered product, a few lessons generalize. Intuition-driven development works early, especially when you’re moving fast and learning what matters, but delaying systematization creates unnecessary risk as the stakes rise. Customer-specific evaluation isn’t optional: general benchmarks measure general capabilities, but they won’t reveal your product’s specific failure modes. And vibes can only get you so far.

For us, shifting from vibe-based evaluation to an evidence-driven system unlocked an institutional capability to continuously adapt as models evolve. While this post focused on a single capability, our growing set of internal benchmarks makes one thing clear: the “best” model varies meaningfully across tasks, and that choice can change quickly.

We’ll share more of our benchmarking work and findings in future posts. The pace of progress in AI is accelerating, and staying ahead requires systems that can keep up.
